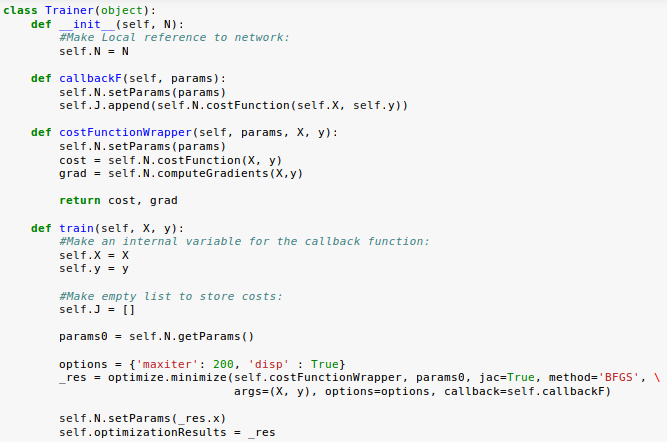

TensorFlow's `ScipyOptimizerInterface.minimize()` does not just return a minimization Op, unlike `Optimizer.minimize()`. Instead, it actually performs the minimization by executing commands to control a Session. Variables subject to optimization are updated in place at the end of optimization.

    minimize(session=None, feed_dict=None, fetches=None, step_callback=None, ...)

Args:

- `feed_dict`: A feed dict to be passed to calls to n.
- `fetches`: A list of Tensors to fetch and supply to `loss_callback` as positional arguments.
- `step_callback`: A function to be called at each optimization step. Its arguments are the current values of all optimization variables, flattened into a single vector.
- `loss_callback`: A function to be called every time the loss and gradients are computed, with the evaluated fetches supplied as positional arguments.

The class constructor also accepts other subclass-specific keyword arguments.

The `scipy.optimize` package provides several commonly used optimization algorithms. The module covers the following areas:

- Unconstrained and constrained minimization of multivariate scalar functions (`minimize()`) using a variety of algorithms (e.g. BFGS, Nelder-Mead simplex, Newton Conjugate Gradient, COBYLA or SLSQP)
- Global (brute-force) optimization routines (e.g. `basinhopping()`; older SciPy releases also offered `anneal()`)
- Least-squares minimization (`leastsq()`) and curve fitting (`curve_fit()`) algorithms
- Scalar univariate function minimizers (`minimize_scalar()`) and root finders (`newton()`)
- Multivariate equation system solvers (`root()`) using a variety of algorithms (e.g. hybrid Powell, Levenberg-Marquardt or large-scale methods such as Newton-Krylov)

## Unconstrained and constrained minimization of multivariate scalar functions

The `minimize()` function in `scipy.optimize` provides a common interface to unconstrained and constrained minimization algorithms for multivariate scalar functions. To demonstrate it, consider the problem of minimizing the Rosenbrock function of $N$ variables:

$$f(x) = \sum_{i=1}^{N-1} \left[ 100\,(x_{i+1} - x_i^2)^2 + (1 - x_i)^2 \right]$$

The minimum value of this function is 0, which is achieved when $x_i = 1$ for every $i$.

In the following example, `minimize()` is used with the Nelder-Mead simplex algorithm, selected through the `method` parameter:

    res = minimize(rosen, x0, method='nelder-mead')

The simplex algorithm is probably the simplest way to minimize a fairly well-behaved function: it needs only function evaluations, not gradients.
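A complete version of the Rosenbrock example with Nelder-Mead might look like the sketch below. The starting point `x0`, the `xatol` tolerance and the `iterates` list are illustrative assumptions, not values from the text. The `callback` argument of `scipy.optimize.minimize` receives the current parameter vector after each iteration, which is similar in spirit to the `step_callback` described for TensorFlow.

```python
import numpy as np
from scipy.optimize import minimize


def rosen(x):
    """Rosenbrock function of N variables; minimum value 0 at x_i = 1."""
    x = np.asarray(x, dtype=float)
    return float(np.sum(100.0 * (x[1:] - x[:-1] ** 2) ** 2 + (1.0 - x[:-1]) ** 2))


# Assumed starting point with N = 5 variables (illustrative only).
x0 = np.array([1.3, 0.7, 0.8, 1.9, 1.2])

# SciPy calls `callback` after each iteration with the current parameter
# vector; keep a copy of every iterate.
iterates = []

# Nelder-Mead uses only function values. A tight `xatol` lets the simplex
# shrink close to the minimum before the algorithm stops.
res = minimize(rosen, x0, method='nelder-mead',
               callback=lambda xk: iterates.append(np.copy(xk)),
               options={'xatol': 1e-8})

print(res.x)          # should be close to [1, 1, 1, 1, 1]
print(res.fun)        # should be close to 0
print(len(iterates))  # number of iterations recorded by the callback
```

SciPy also ships its own `scipy.optimize.rosen`, which could replace the hand-written `rosen` here.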

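The other routine families in the `scipy.optimize` list can be tried in the same way. This sketch uses small made-up problems whose answers are known in advance (a shifted parabola, the square root of 2, a 2-by-2 linear system and a straight line); those test functions are illustrative assumptions, while `minimize_scalar`, `newton`, `root` and `curve_fit` are the SciPy functions named in the list.

```python
import numpy as np
from scipy.optimize import curve_fit, minimize_scalar, newton, root

# Scalar univariate minimizer: (x - 2)^2 + 1 has its minimum at x = 2.
scalar_res = minimize_scalar(lambda x: (x - 2.0) ** 2 + 1.0)

# Scalar root finder: without a derivative, newton() uses the secant method.
sqrt2 = newton(lambda x: x * x - 2.0, x0=1.0)


# Multivariate equation system: x + y = 3 and x - y = 1 (solution x = 2, y = 1).
def equations(v):
    return [v[0] + v[1] - 3.0, v[0] - v[1] - 1.0]


root_res = root(equations, x0=[0.0, 0.0], method='hybr')  # hybrid Powell


# Curve fitting: recover slope and intercept from noise-free straight-line data.
def line(x, a, b):
    return a * x + b


xdata = np.linspace(0.0, 5.0, 20)
ydata = line(xdata, 1.5, -0.5)
params, covariance = curve_fit(line, xdata, ydata)

print(scalar_res.x, sqrt2, root_res.x, params)
```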
# SCIPY OPTIMIZE MINIMIZE UPDATE

TensorFlow's `ScipyOptimizerInterface` has the following constructor:

    ScipyOptimizerInterface(
        loss, var_list=None, equalities=None, inequalities=None, var_to_bounds=None,
        ...
    )

Inherits From: `ExternalOptimizerInterface`

`var_list`: Optional list of `Variable` objects to update to minimize `loss`.

Example:

    vector = tf.Variable(..., 'vector')
    optimizer = ScipyOptimizerInterface(loss, options=...)

Example with more complicated constraints:

    vector = tf.Variable(..., 'vector')

    # Ensure the vector's y component equals 1.
    equalities = [vector[1] - 1.]
    # Ensure the vector's x component is >= 1.
    inequalities = [vector[0] - 1.]

    # Our default SciPy optimization algorithm, L-BFGS-B, does not support
    # general constraints. Thus we use SLSQP instead.
    optimizer = ScipyOptimizerInterface(
        loss, equalities=equalities, inequalities=inequalities, method='SLSQP')
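Because `ScipyOptimizerInterface` hands its problem to SciPy, the constrained example can be mirrored directly with `scipy.optimize.minimize`. This is a sketch under stated assumptions: the squared-norm loss and the starting point `[3.0, 4.0]` are hypothetical, since the TensorFlow fragment does not preserve either. SciPy's `'ineq'` constraints are held non-negative, the same convention as the `inequalities` list. The second call shows simple per-variable bounds, the kind of constraint `var_to_bounds` expresses, which L-BFGS-B can handle on its own.

```python
import numpy as np
from scipy.optimize import minimize


def loss(v):
    # Assumed loss (not given in the fragment): squared Euclidean norm.
    return float(np.sum(np.square(v)))


start = np.array([3.0, 4.0])  # hypothetical starting point

# 'eq': fun(v) == 0 keeps the y component at 1.
# 'ineq': fun(v) >= 0 keeps the x component at or above 1.
constraints = [
    {'type': 'eq', 'fun': lambda v: v[1] - 1.0},
    {'type': 'ineq', 'fun': lambda v: v[0] - 1.0},
]

# L-BFGS-B supports only bounds, so general constraints need SLSQP.
constrained = minimize(loss, start, method='SLSQP', constraints=constraints)

# Simple bounds (x >= 1, y >= 2) are enough for L-BFGS-B.
bounded = minimize(loss, start, method='L-BFGS-B',
                   bounds=[(1.0, None), (2.0, None)])

print(constrained.x)  # expected near [1, 1]
print(bounded.x)      # expected near [1, 2]
```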

`ScipyOptimizerInterface` is a wrapper allowing `scipy.optimize.minimize` to operate a `tf.compat.v1.Session`.
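The idea behind such a wrapper can be sketched without TensorFlow. In this hypothetical version, plain NumPy arrays stand in for graph variables, and the helper names (`pack`, `unpack`, `loss_and_grad`) and the targets are made up for illustration; it is not TensorFlow's implementation. All variables are flattened into one vector (the form `step_callback` receives), a function returning both loss and gradient is passed with `jac=True`, L-BFGS-B is used because it is the default algorithm mentioned in the constrained example, and the optimum is written back into the arrays in place at the end.

```python
import numpy as np
from scipy.optimize import minimize

# Hypothetical "variables": NumPy arrays standing in for graph variables.
variables = {'a': np.zeros(2), 'B': np.zeros((2, 2))}
# Hypothetical targets that the loss pulls the variables toward.
targets = {'a': np.array([1.0, -2.0]), 'B': np.array([[0.5, 0.0], [3.0, 4.0]])}
names = list(variables)


def pack(arrays):
    """Flatten all variables into a single 1-D vector in a fixed order."""
    return np.concatenate([arrays[n].ravel() for n in names])


def unpack(vector):
    """Split a flat vector back into arrays shaped like the variables."""
    out, offset = {}, 0
    for n in names:
        size = variables[n].size
        out[n] = vector[offset:offset + size].reshape(variables[n].shape)
        offset += size
    return out


def loss_and_grad(vector):
    """Return the scalar loss and its flat gradient for a sum of squared errors."""
    values = unpack(vector)
    diffs = {n: values[n] - targets[n] for n in names}
    loss = sum(float(np.sum(d ** 2)) for d in diffs.values())
    grad = pack({n: 2.0 * diffs[n] for n in names})
    return loss, grad


steps = []  # flat parameter vectors, one per iteration
result = minimize(loss_and_grad, pack(variables), jac=True, method='L-BFGS-B',
                  callback=lambda xk: steps.append(np.copy(xk)))

# Update the original arrays in place at the end of optimization.
for n, value in unpack(result.x).items():
    variables[n][...] = value
```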
